Soundscapes of Text

What is 'Soundscapes of Text'?

This pilot

What is the larger project?

This pilot belongs to a larger project, still in development, that aims to change the way we understand reading and to explore new ways of thinking about the immersive book of the future. Since the mid-twentieth century, our cultural histories of the book have rested on the idea that the move towards silent reading was completed by a new technology invented in the Renaissance: print. Our project began with a hunch that the technologies of voice and print in fact intersect in meaningful ways in this period. The new project has three aims: (1) to recover the early voicescapes of early modern London, from the theatre to the printing house, which helped to create early printed books conceived as live experiences and social events; (2) to recover, explore and analyse the internal voice of adult readers today; (3) to experiment with digital technologies to understand the relationship between text, meaning and sound, and so to imagine and create future books that prioritise reading as experience. This pilot is our early contribution to aim (3).

What does the pilot do?

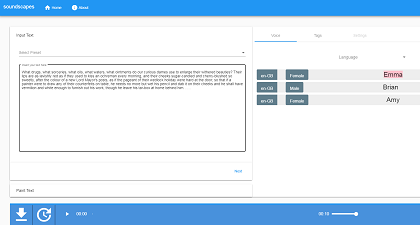

This pilot gives users a series of choices to explore their reading voice. After uploading some text (a few sentences is probably best), the user chooses from a series of voices in a dropdown menu and creates cues to explore the effect of their choices. They might start by choosing a voice to listen to: male or female, British or American. They can then choose particular timbres, as well as pace, prosody and emphasis, exploring the relationship between sound (voice) and meaning. The software underpinning this pilot is AWS Polly, the same text-to-speech engine used for Amazon Alexa Smart Speakers.
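For readers curious about how such cues might be expressed, the following is a minimal sketch rather than the pilot's actual code. It assumes the cues are written as SSML (the markup Polly accepts for pace and emphasis) and sent through the `boto3` Python library. The names `VOICES`, `build_ssml` and `synthesise` are hypothetical, and the mapping of menu choices to the Polly voices Amy, Brian, Joanna and Matthew is our own illustration. Support for individual SSML tags varies between Polly voice types, so the Polly documentation should be checked before relying on any one tag.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from the menu choices described above to Polly voice IDs.
VOICES = {
    ("female", "British"): "Amy",
    ("male", "British"): "Brian",
    ("female", "American"): "Joanna",
    ("male", "American"): "Matthew",
}


def build_ssml(text, rate="medium", emphasised=()):
    """Wrap text in SSML, setting the pace and stressing the chosen words."""
    targets = {w.lower() for w in emphasised}
    words = []
    for word in text.split():
        safe = escape(word)  # protect characters such as & and <
        # Compare without surrounding punctuation so "never," matches "never".
        if word.strip(".,;:!?'\"").lower() in targets:
            safe = f'<emphasis level="strong">{safe}</emphasis>'
        words.append(safe)
    body = " ".join(words)
    return f'<speak><prosody rate="{rate}">{body}</prosody></speak>'


def synthesise(ssml, gender="female", accent="British"):
    """Send SSML to Polly and return MP3 bytes (needs boto3 and AWS credentials)."""
    import boto3  # imported here so build_ssml works without AWS set up

    polly = boto3.client("polly")
    response = polly.synthesize_speech(
        Text=ssml,
        TextType="ssml",
        VoiceId=VOICES[(gender, accent)],
        OutputFormat="mp3",
    )
    return response["AudioStream"].read()
```

Calling `build_ssml("I never said that.", rate="slow", emphasised=["never"])` and then moving the emphasis to "said" or "that" gives a quick way to hear how the same sentence changes meaning with stress.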

What doesn't the pilot do?

First of all, this pilot does not look ‘nice’. We were not designing a tool for commercial use, but asking a question: can we make explicit the relationship between voice and meaning? And what software might we use to do that? AWS Polly is still in development, and we hope that it will become more nuanced over time. The downside is that the software is constantly being updated, which means our pilot needs regular updating too. We are also concerned that the voices are not ‘human’ enough, and that the process of cueing a text is slow.

Principal Investigator: Jennifer Richards

Development: Matthew Nolf, Mark Turner

Development advisors: James Cummings, Tiago Sousa Garcia

Publications/presentations mentioning this pilot:

- Jennifer Richards and Tiago Sousa Garcia, 'Animating Text', presentation at 'C.A.K.E. 15: Digital Archives', the Mining Institute, Newcastle, 25th January 2018.