Meta-Research

CASCAde publishes a simulation for strength of evidence evaluation

CASCAde published a simulation on the strength of evidence of socio-technical studies in security and privacy as a Shiny app. It is drawn from a Systematic Literature Review of publications in the years 2006-2016. The simulation is built upon the same data used in the meta-research output Why Most Results of Socio-Technical Security User Studies Are False. The simulation offers three views: 1) False-Positive Risk, 2) Upper-Bound PPV and 3) Planning.

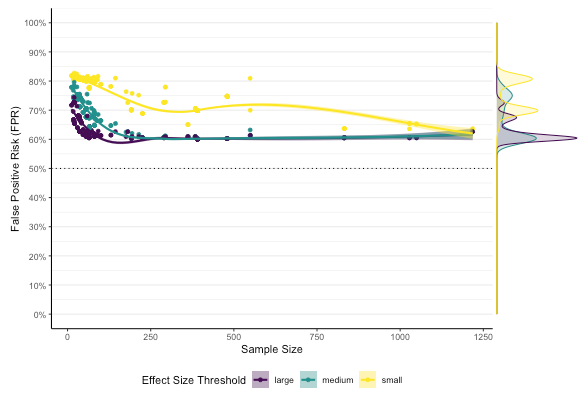

1) False-Positive Risk

This view estimates the False-Positive Risk of the existing sample of statistical tests, allowing the user to make assumptions about the effect size threshold expected in the population, the anticipated prior probability and the anticipated bias. The anticipated prior can be modified to reflect how exploratory or confirmatory the studies are thought to be on average. The anticipated bias models how well-conducted the studies are, ranging from well-run randomized controlled trials (RCTs), arguably few in the field, to biased studies. The outcome of these parameter choices is a view of the resulting false-positive risk. A false-positive risk of less than 50% is desirable.
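To make the role of these parameters concrete, here is a minimal sketch that computes a false-positive risk from statistical power, prior probability and bias. It assumes the bias-adjusted PPV formula of Ioannidis (2005); whether the Shiny app uses exactly this model is an assumption, and the function names are hypothetical.

```python
def ppv_with_bias(power, prior, bias=0.0, alpha=0.05):
    """Positive Predictive Value under the Ioannidis (2005) bias model.

    power: probability of detecting a true relation (1 - beta)
    prior: assumed probability that a tested relation is true
    bias:  assumed share of would-be negative results reported as positive
    alpha: significance level of the test
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must lie strictly between 0 and 1")
    odds = prior / (1.0 - prior)          # pre-study odds R
    beta = 1.0 - power                    # type II error rate
    true_positives = odds * (power + bias * beta)
    false_positives = alpha + bias * (1.0 - alpha)
    return true_positives / (true_positives + false_positives)


def false_positive_risk(power, prior, bias=0.0, alpha=0.05):
    """False-positive risk as the complement of the PPV."""
    return 1.0 - ppv_with_bias(power, prior, bias, alpha)


if __name__ == "__main__":
    # Illustrative parameter choices only, not values taken from the app.
    for bias in (0.0, 0.1, 0.3):
        fpr = false_positive_risk(power=0.8, prior=0.5, bias=bias)
        print(f"bias={bias:.1f}  false-positive risk={fpr:.3f}")
```

Under this model, raising the bias or lowering the prior increases the false-positive risk, which is the behaviour the sliders in this view let the user explore.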

2) Upper-Bound PPV

The second panel produces heat-maps of the maximum Positive-Predictive Value (PPV) achievable by studies, assuming effect size thresholds in the population and prevalent biases in experiments chosen by the user.
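The idea behind such a heat-map can be sketched as follows: derive the power a study can reach at an assumed population effect size, then tabulate the PPV over a grid of priors and biases. This is only an illustration under stated assumptions: the normal approximation to a two-sided two-sample test, the Ioannidis (2005) bias model, and all function names are choices made here, not details confirmed for the app.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size_d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided two-sample test (normal approximation)."""
    normal = NormalDist()
    z_crit = normal.inv_cdf(1.0 - alpha / 2.0)
    shift = effect_size_d * sqrt(n_per_group / 2.0)
    # Probability of landing in either rejection region under the alternative.
    return normal.cdf(shift - z_crit) + normal.cdf(-shift - z_crit)


def ppv(power, prior, bias, alpha=0.05):
    """PPV under the (assumed) Ioannidis bias model."""
    odds = prior / (1.0 - prior)
    true_pos = odds * (power + bias * (1.0 - power))
    false_pos = alpha + bias * (1.0 - alpha)
    return true_pos / (true_pos + false_pos)


def ppv_grid(power, priors, biases, alpha=0.05):
    """One row per bias value, one column per prior: the heat-map data."""
    return [[ppv(power, p, b, alpha) for p in priors] for b in biases]


if __name__ == "__main__":
    # Hypothetical study: 64 participants per group, medium effect threshold.
    power = approx_power(effect_size_d=0.5, n_per_group=64)
    priors = [0.1, 0.25, 0.5]
    biases = [0.0, 0.2, 0.4]
    for bias, row in zip(biases, ppv_grid(power, priors, biases)):
        print(f"bias={bias:.1f}", " ".join(f"{v:.2f}" for v in row))
```

Each row of the grid corresponds to one bias level and each column to one prior, so the values can be passed to any plotting library to render the heat-map.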

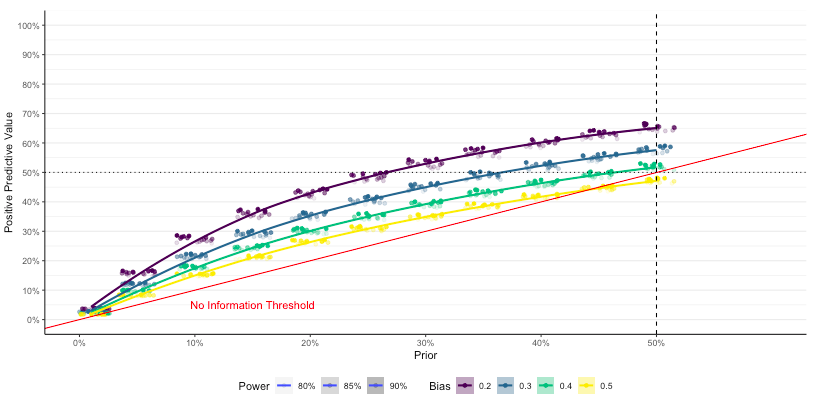

3) Planning

The planning panel enables researchers to plan ahead with the end in mind. One can choose parameters for a priori statistical power, anticipated bias, and an estimated prior probability (derived from the number of relations anticipated to be true or false). The a priori power can be determined with a dedicated planning tool, such as G*Power. One then obtains a graph simulating the consequences of these choices. The colored dot clusters and lines approximate the a posteriori distribution of the Positive Predictive Value (PPV), that is, the probability that positive reports are actually true in reality, given the experiment, as a function of the prior probability. The dashed line marks the prior anticipated by the user. The point at which the dashed line meets the PPV simulation indicates the likely strength of evidence of the planned experiment.
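A small Monte Carlo sketch can illustrate what such a planning simulation does. It assumes that the prior is the share of anticipated true relations among all anticipated relations and that bias acts as in Ioannidis (2005); the function names, the seed and the number of simulated studies are hypothetical choices, not the app's implementation.

```python
import random


def prior_from_counts(n_true, n_false):
    """Prior probability from the numbers of relations anticipated true/false."""
    total = n_true + n_false
    if total <= 0:
        raise ValueError("need at least one anticipated relation")
    return n_true / total


def simulate_ppv(power, prior, bias=0.0, alpha=0.05, n_studies=100_000, seed=1):
    """Estimate the PPV by simulating many hypothetical studies."""
    rng = random.Random(seed)
    true_pos = 0
    false_pos = 0
    for _ in range(n_studies):
        relation_is_true = rng.random() < prior
        if relation_is_true:
            # Detected by the test, or reported positive because of bias.
            p_positive = power + bias * (1.0 - power)
        else:
            # Type I error, or reported positive because of bias.
            p_positive = alpha + bias * (1.0 - alpha)
        if rng.random() < p_positive:
            if relation_is_true:
                true_pos += 1
            else:
                false_pos += 1
    positives = true_pos + false_pos
    return true_pos / positives if positives else float("nan")


if __name__ == "__main__":
    # Hypothetical plan: 1 of 4 investigated relations expected to be true.
    prior = prior_from_counts(n_true=1, n_false=3)
    print(simulate_ppv(power=0.8, prior=prior, bias=0.1))
```

Running the simulation for a range of priors yields the kind of curve on which the anticipated prior can be marked.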

Why Most Results of Socio-Technical Security User Studies Are False PDF 885Kb

In recent years, cyber security user studies have been scrutinized for their reporting completeness, statistical reporting fidelity, statistical reliability and biases. It remains an open question what strength of evidence positive reports of such studies actually yield. We focus on the extent to which positive reports indicate relations that are true in reality, that is, a probabilistic assessment. This study aims at establishing the overall strength of evidence in cyber security user studies, with the dimensions Positive Predictive Value and its complement False Positive Risk, Likelihood Ratio, and Reverse-Bayesian Prior for a fixed tolerated False Positive Risk. Based on 431 coded statistical inferences in 146 cyber security user studies from a published SLR covering the years 2006-2016, we first compute a simulation of the a posteriori false positive risk based on assumed prior and bias thresholds. Second, we establish the observed likelihood ratios for positive reports. Third, we compute the reverse Bayesian argument on the observed positive reports by computing the prior required for a fixed a posteriori false positive rate. We obtain a comprehensive analysis of the strength of evidence including an account of appropriate multiple comparison corrections. The simulations show that even in the face of well-controlled conditions and high prior likelihoods, only a few studies achieve good a posteriori probabilities. Our work shows that the strength of evidence of the field is weak and that most positive reports are likely false. From this, we learn what to watch out for in studies to advance the knowledge of the field.
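The reverse Bayesian argument mentioned in the abstract can be illustrated with a short sketch: given a likelihood ratio in favour of a true relation and a tolerated false positive risk, solve Bayes' rule in odds form for the prior that would be required. The likelihood ratio used here, power divided by alpha, is a simplifying assumption for illustration; the paper may derive likelihood ratios differently, and the function names are hypothetical.

```python
def likelihood_ratio_positive(power, alpha=0.05):
    """Simplified likelihood ratio of a positive report: P(+|true) / P(+|false)."""
    return power / alpha


def reverse_bayes_prior(likelihood_ratio, tolerated_fpr=0.05):
    """Prior probability needed so that the posterior FPR equals tolerated_fpr.

    Uses posterior odds = likelihood ratio * prior odds, with
    FPR = 1 / (1 + posterior odds).
    """
    if not 0.0 < tolerated_fpr < 1.0:
        raise ValueError("tolerated_fpr must lie strictly between 0 and 1")
    prior_odds = (1.0 - tolerated_fpr) / (tolerated_fpr * likelihood_ratio)
    return prior_odds / (1.0 + prior_odds)


if __name__ == "__main__":
    lr = likelihood_ratio_positive(power=0.8, alpha=0.05)
    print(f"required prior: {reverse_bayes_prior(lr, tolerated_fpr=0.05):.3f}")
```

A required prior close to 1 means a positive report is only convincing if the relation was already very likely to be true beforehand.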

The definitive version of this work is to appear as Thomas Groß. Why Most Results of Socio-Technical Security User Studies Are False - And What to Do About it. In 12th International Workshop on Socio-Technical Aspects in Security (STAST), 2022. Best paper award.

Evidence-based Methods in Cyber Security Research PDF 6,000Kb

We gave this keynote at the first Annual UK Cyber Security PhD Winter School at the beginning of 2020. It considers the state-of-play of cyber security research, especially in light of our investigation into cyber security user studies, and offers recommendations for each area under consideration.

This relates to outputs in evidence-based methods/meta-research and WP4 Usable Security.

Statistical Reliability of 10 Years of Cyber Security User Studies PDF 885Kb

Abstract. In recent years, cyber security user studies have been appraised in meta-research, mostly focusing on the completeness of their statistical inferences and the fidelity of their statistical reporting. However, estimates of the field's distribution of statistical power and its publication bias have not received much attention. We aim to estimate the effect sizes and their standard errors present as well as the implications on statistical power and publication bias. We built upon a published systematic literature review of 146 user studies in cyber security (2006-2016). We took into account 431 statistical inferences including t-, χ²-, r-, one-way F-, and Z-tests. In addition, we coded the corresponding total sample sizes, group sizes and test families. Given these data, we established the observed effect sizes and evaluated the overall publication bias. We further computed the statistical power vis-à-vis parametrized population thresholds to gain unbiased estimates of the power distribution. Results. We obtained a distribution of effect sizes and their conversion into comparable log odds ratios together with their standard errors. We further gained insights into key biases such as the publication bias and the winner's curse.
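As an illustration of how effect sizes from different tests can be made comparable, the sketch below converts an independent-samples t statistic into Cohen's d and then into a log odds ratio with its standard error. It uses the standard textbook conversions (for example, ln OR = d · π/√3); that the paper uses these exact formulas is an assumption, and the function names are hypothetical.

```python
from math import pi, sqrt


def cohens_d_from_t(t_value, n1, n2):
    """Cohen's d from an independent-samples t statistic and group sizes."""
    return t_value * sqrt(1.0 / n1 + 1.0 / n2)


def se_of_d(d, n1, n2):
    """Approximate standard error of Cohen's d."""
    variance = (n1 + n2) / (n1 * n2) + d * d / (2.0 * (n1 + n2))
    return sqrt(variance)


def log_odds_ratio_from_d(d, se_d):
    """Convert d and its standard error to the log odds ratio scale."""
    factor = pi / sqrt(3.0)
    return d * factor, se_d * factor


if __name__ == "__main__":
    # Hypothetical test result: t = 2.0 with 50 participants per group.
    d = cohens_d_from_t(2.0, 50, 50)
    log_or, se_log_or = log_odds_ratio_from_d(d, se_of_d(d, 50, 50))
    print(f"d={d:.3f}  ln(OR)={log_or:.3f}  SE={se_log_or:.3f}")
```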

Note. The technical report appeared as Thomas Groß. Statistical Reliability of 10 Years of Cyber Security User Studies. arXiv:2010.02117, 2020.

The definitive version of this work is published as Thomas Groß. Statistical Reliability of 10 Years of Cyber Security User Studies. In Proceedings of the 10th International Workshop on Socio-Technical Aspects in Security (STAST'2020), LNCS, 11739, Springer Verlag, 2020.

Fidelity of Statistical Reporting in 10 Years of Cyber Security User Studies PDF 1,025Kb

Abstract. Studies in socio-technical aspects of security often rely on user studies and statistical inferences on investigated relations to make their case. To ascertain this capacity, we investigated the reporting fidelity of security user studies. Based on a systematic literature review of 114 user studies in cyber security from selected venues in the 10 years 2006-2016, we evaluated the fidelity of the reporting of 1775 statistical inferences. We conducted a systematic classification of incomplete reporting, reporting inconsistencies and decision errors, leading to a multinomial logistic regression (MLR). We found that half the cyber security user studies considered reported incomplete results, in stark contrast to comparable results in a field of psychology. Our MLR on analysis outcomes yielded a slight increase of likelihood of incomplete tests over time, while SOUPS yielded a few percent greater likelihood to report statistics correctly than other venues. In this study, we offer the first fully quantitative analysis of the state-of-play of socio-technical studies in security.

Note. This technical report appeared as Thomas Groß. Fidelity of Statistical Reporting in 10 Years of Cyber Security User Studies. arXiv:2004.06672, 2020.

The definitive version of this work is published as Thomas Groß. Fidelity of Statistical Reporting in 10 Years of Cyber Security User Studies. In Proceedings of the 9th International Workshop on Socio-Technical Aspects in Security (STAST'2019), LNCS 11739, Springer Verlag, 2020, pp. 1-24.

A Systematic Evaluation of Evidence-Based Methods in Cyber Security User Studies PDF 598Kb

Abstract. In recent years, there has been a movement to strengthen evidence-based methods in cyber security under the flag of “science of security.” It is therefore an opportune time to take stock of the state-of-play of the field. We evaluated the state-of-play of evidence-based methods in cyber security user studies. We conducted a systematic literature review study of cyber security user studies from relevant venues in the years 2006-2016. We established a qualitative coding of the included sample papers with an a priori codebook of 9 indicators of reporting completeness. We further extracted effect sizes for papers with parametric tests on differences between means for a quantitative analysis of effect size distribution and post-hoc power. Results. We observed that only 21% of studies replicated existing methods while 78% provided the documentation to enable future replication. With respect to internal validity, we found that only 24% provided operationalization of research questions and hypotheses. We observed that reporting largely did not adhere to APA guidelines as the relevant reporting standard: only 6% provided comprehensive reporting of results that would support meta-analysis. We further noticed a considerable reliance on p-value significance, where only 1% of the studies provided effect size estimates. Of the tests selected for quantitative analysis, 80% reported a trivial to small effect, while only 28% had post-hoc power > 80%. Only 16% were still statistically significant after Bonferroni correction for the multiple comparisons made. This study offers a first evidence-based reflection on the state-of-play in the field and indicates areas that could help advance the field’s research methodology.
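The Bonferroni correction mentioned in the abstract can be sketched in a few lines: with m comparisons in a study, each p-value is compared against alpha / m instead of alpha. The p-values below are made up for illustration and the function name is hypothetical.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag which p-values stay significant after Bonferroni correction."""
    m = len(p_values)
    if m == 0:
        return []
    threshold = alpha / m  # per-comparison significance level
    return [p <= threshold for p in p_values]


if __name__ == "__main__":
    # Hypothetical p-values from four comparisons within one study.
    p_values = [0.001, 0.02, 0.04, 0.3]
    flags = bonferroni_significant(p_values)
    share = sum(flags) / len(flags)
    print(flags, f"share still significant: {share:.0%}")
```

In this made-up example, three of the four results would count as significant at the uncorrected 5% level, but only one survives the correction.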

Note. Kovila Coopamootoo and Thomas Groß. A Systematic Evaluation of Evidence-Based Methods in Cyber Security User Studies. Newcastle University Technical Report TR-1528, 2019.