Participants

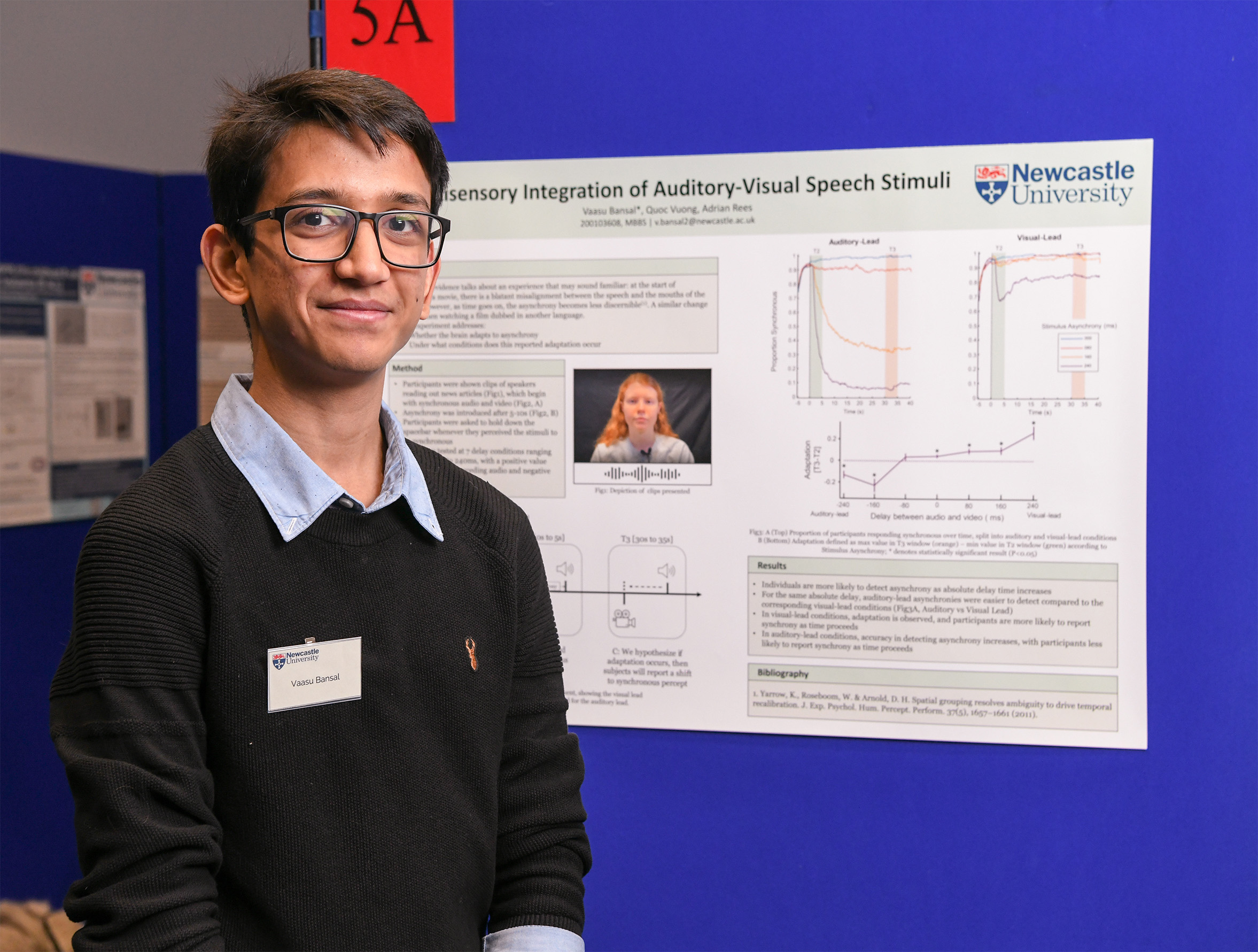

Vaasu Bansal

Historically, neuroscientists have studied our different senses in isolation because they appear to rely on independent brain pathways. But many natural stimuli activate multiple senses simultaneously, and there is growing evidence for pathways and centres in the brain where our senses interact. The interactions between vision and hearing are important because they help us to hear better under difficult listening conditions.

In this study we used natural faces and speech to investigate auditory-visual sensory integration, focusing on the interaction between speech sounds and lip movements. The project asked whether the brain adapts to temporal misalignment between sound and vision in audio-visual speech, and under what conditions this adaptation occurs.

The study demonstrated adaptation when the visual stimulus preceded the auditory component of speech by up to 240 milliseconds. In the inverse conditions, where the auditory component preceded the visual stimulus, no such adaptation was seen, and accuracy in detecting asynchrony increased as the delay increased.

Funded by: Newcastle University Research Scholarship

Project Supervisor: Adrian Rees